BGP Confederations:

With confederations, we partition the whole AS into smaller sub-ASes, so there is no need to build a full-mesh iBGP topology between all the BGP routers in the network. Each sub-AS (also called a member AS) keeps a full-mesh iBGP topology between its own routers. Between sub-ASes, however, the sessions behave much like eBGP sessions, with some differences (in fact, they are called confederation BGP, or cBGP, sessions). Each sub-AS has a different number, usually a private one (from 64512 to 65534).

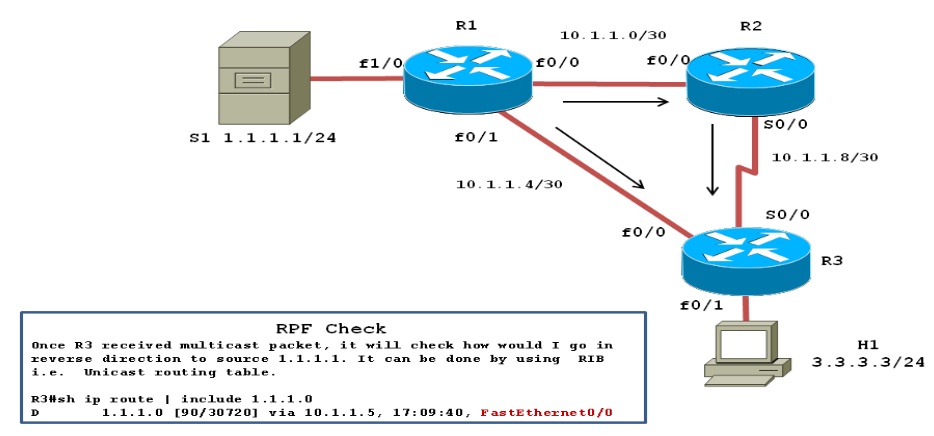

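The topology we will focus on is shown in the figure above. R1 sits in AS 1111 and peers over eBGP with R2. R2, R3 and R4 form sub-AS 64990, and R5 and R6 form sub-AS 64991. The cBGP session between R4 and R5 links the two sub-ASes.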

Two things are necessary to build a confederation:

- The private sub-AS numbers used inside the confederation (64990 and 64991 in our topology).
- The confederation identifier: the ASN that the whole confederation presents to true eBGP peers (2222 in our topology).
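As a rough illustration of these two ingredients, the Python sketch below models a confederation as a hypothetical Confederation record. The record holds the identifier and the set of sub-AS numbers. It flags two likely mistakes:

- reusing the identifier as a sub-AS number;
- using a sub-AS outside the private range mentioned above. This is a common convention, not a protocol requirement.

The names are illustrative only and are not part of any router or library API.

```python
from dataclasses import dataclass

# 16-bit private ASN range quoted in the text (64512 to 65534).
PRIVATE_ASN_MIN = 64512
PRIVATE_ASN_MAX = 65534


def is_private_asn(asn: int) -> bool:
    """Return True if asn falls in the 16-bit private range."""
    return PRIVATE_ASN_MIN <= asn <= PRIVATE_ASN_MAX


@dataclass(frozen=True)
class Confederation:
    identifier: int          # ASN shown to true eBGP peers (2222 in the example)
    member_ases: frozenset   # sub-AS numbers (64990 and 64991 in the example)

    def validate(self) -> list:
        """Return human-readable warnings (empty list if nothing looks wrong)."""
        problems = []
        if self.identifier in self.member_ases:
            problems.append(f"identifier {self.identifier} reused as a sub-AS")
        for asn in sorted(self.member_ases):
            if not is_private_asn(asn):
                # Convention only: private sub-AS numbers are usual, not mandatory.
                problems.append(f"sub-AS {asn} is not in the private range")
        return problems


confed = Confederation(identifier=2222, member_ases=frozenset({64990, 64991}))
print(confed.validate())  # []
```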

Routers in a confederation can have three types of BGP sessions:

- Regular iBGP sessions: with routers inside the same sub-AS. All these routers run their BGP process under the same sub-AS number (64990 or 64991 in our topology), and a full-mesh iBGP topology is still required inside each sub-AS. All the usual iBGP rules apply here.
- cBGP sessions: the BGP sessions built between different sub-ASes are called cBGP (confederation BGP) sessions, and they mix iBGP and eBGP behavior. As with eBGP, the sub-AS number is added to the AS_PATH and the peer is expected to be directly connected. As with iBGP, attributes such as NEXT_HOP, LOCAL_PREF and MED are passed along unchanged. The topology between sub-ASes doesn't need to be a full mesh.
- Regular eBGP sessions: towards external peers, the whole confederation behaves as a single AS (the confederation identifier). All the usual eBGP rules apply here.
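The three session types can be summarized as a small decision function. This is a simplified Python sketch. It assumes a router only needs three inputs:

- its own sub-AS (the router bgp number);
- its bgp confederation peers list;
- the neighbor's remote-as.

classify_session is a hypothetical name, and real BGP implementations track far more state. The example uses R4's neighbors from this article's configuration.

```python
def classify_session(local_sub_as: int, confed_peers: set, remote_as: int) -> str:
    """Classify a neighbor into one of the three session types described above.

    local_sub_as: the ASN after "router bgp" (the router's own sub-AS)
    confed_peers: the ASNs listed under "bgp confederation peers"
    remote_as:    the ASN given in "neighbor ... remote-as"
    """
    if remote_as == local_sub_as:
        return "iBGP"  # same sub-AS: full mesh, usual iBGP rules
    if remote_as in confed_peers:
        return "cBGP"  # another sub-AS of the same confederation
    return "eBGP"      # outside the confederation: sees only the identifier


# R4: sub-AS 64990, confederation peer 64991
r4_neighbors = {"2.2.2.2": 64990, "3.3.3.3": 64990, "5.5.5.5": 64991}
for addr, asn in r4_neighbors.items():
    print(addr, classify_session(64990, {64991}, asn))
```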

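Every router inside the confederation must know three things. The first is the number of its own sub-AS (configured with router bgp). The second is the numbers of the other sub-ASes in the confederation (bgp confederation peers). The third is the ASN that the whole confederation presents to eBGP peers (bgp confederation identifier).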
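This hypothetical Python helper makes the mapping between those three facts and the configuration explicit. It prints the header lines that every confederation router in the example shares. It only formats text and does not talk to a router. The one-space indentation mimics how IOS displays router sub-commands.

```python
def confed_header(sub_as: int, identifier: int, peers: list) -> list:
    """Return the IOS lines that encode the three facts every confederation router needs."""
    lines = [
        f"router bgp {sub_as}",                           # own sub-AS
        f" bgp confederation identifier {identifier}",    # ASN seen by eBGP peers
    ]
    if peers:
        # Other sub-ASes that belong to the same confederation
        lines.append(" bgp confederation peers " + " ".join(str(p) for p in sorted(peers)))
    return lines


print("\n".join(confed_header(64990, 2222, [64991])))
```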

The configuration of each router in the example is as follows:

Configuration on R1:

```
router bgp 1111
 network 100.100.100.0 mask 255.255.255.0 route-map LOOPBACK
 neighbor 20.20.12.2 remote-as 2222
```

Configuration on R2:

```
router bgp 64990
 bgp confederation identifier 2222
 bgp confederation peers 64991
 neighbor 3.3.3.3 remote-as 64990
 neighbor 3.3.3.3 update-source Loopback0
 neighbor 3.3.3.3 next-hop-self
 neighbor 4.4.4.4 remote-as 64990
 neighbor 4.4.4.4 update-source Loopback0
 neighbor 4.4.4.4 next-hop-self
 neighbor 20.20.12.1 remote-as 1111
```

Configuration on R3:

```
router bgp 64990
 bgp confederation identifier 2222
 bgp confederation peers 64991
 neighbor 2.2.2.2 remote-as 64990
 neighbor 2.2.2.2 update-source Loopback0
 neighbor 4.4.4.4 remote-as 64990
 neighbor 4.4.4.4 update-source Loopback0
```

Configuration on R4:

```
router bgp 64990
 bgp confederation identifier 2222
 bgp confederation peers 64991
 neighbor 2.2.2.2 remote-as 64990
 neighbor 2.2.2.2 update-source Loopback0
 neighbor 3.3.3.3 remote-as 64990
 neighbor 3.3.3.3 update-source Loopback0
 neighbor 5.5.5.5 remote-as 64991
 neighbor 5.5.5.5 update-source Loopback0
 neighbor 5.5.5.5 ebgp-multihop 2
```

Configuration on R5:

```
router bgp 64991
 bgp confederation identifier 2222
 bgp confederation peers 64990
 neighbor 4.4.4.4 remote-as 64990
 neighbor 4.4.4.4 update-source Loopback0
 neighbor 4.4.4.4 ebgp-multihop 2
 neighbor 6.6.6.6 remote-as 64991
 neighbor 6.6.6.6 update-source Loopback0
```

Configuration on R6:

```
router bgp 64991
 bgp confederation identifier 2222
 bgp confederation peers 64990
 network 166.166.166.0 mask 255.255.255.0
 neighbor 5.5.5.5 remote-as 64991
 neighbor 5.5.5.5 update-source Loopback0
```
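Each sub-AS still needs a full iBGP mesh, so it can be handy to check the neighbor statements against that rule. The Python sketch below encodes the neighbor addresses from the router configurations, leaving out the eBGP session to R1. It then reports any missing iBGP session inside a sub-AS. The data layout and the missing_ibgp_sessions function are assumptions made for this illustration.

```python
from itertools import combinations

# Sub-AS of each router and the loopback it uses as its peering address.
SUB_AS = {"R2": 64990, "R3": 64990, "R4": 64990, "R5": 64991, "R6": 64991}
LOOPBACK = {"R2": "2.2.2.2", "R3": "3.3.3.3", "R4": "4.4.4.4",
            "R5": "5.5.5.5", "R6": "6.6.6.6"}

# Neighbor addresses configured on each router (eBGP neighbor to R1 omitted).
NEIGHBORS = {
    "R2": {"3.3.3.3", "4.4.4.4"},
    "R3": {"2.2.2.2", "4.4.4.4"},
    "R4": {"2.2.2.2", "3.3.3.3", "5.5.5.5"},
    "R5": {"4.4.4.4", "6.6.6.6"},
    "R6": {"5.5.5.5"},
}


def missing_ibgp_sessions(sub_as, loopback, neighbors):
    """List (a, b) pairs in the same sub-AS where router a has no neighbor statement for b."""
    missing = []
    for a, b in combinations(sorted(sub_as), 2):
        if sub_as[a] != sub_as[b]:
            continue  # different sub-ASes: cBGP, no full mesh required
        if loopback[b] not in neighbors[a]:
            missing.append((a, b))
        if loopback[a] not in neighbors[b]:
            missing.append((b, a))
    return missing


print(missing_ibgp_sessions(SUB_AS, LOOPBACK, NEIGHBORS))  # []
```

If neighbor 4.4.4.4 were removed from R3, the function would report ('R3', 'R4'). The single R4-R5 cBGP session is never flagged, because the sub-ASes don't need to be fully meshed with each other.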

We must pay attention to the BGP session between R4 and R5. They belong to different sub-ASes, so the session they build is a cBGP session, and some eBGP rules apply to it. One of those rules is that the peer is expected to be directly connected: by default, eBGP packets are sent with a TTL of 1. R4 and R5 peer between their loopback addresses, so we need one of two options. We can peer using the interface IP addresses, or we can add the command ebgp-multihop 2 on both sides.
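The rule above can be checked mechanically. This simplified Python sketch parses the few IOS commands used in this example. It flags cBGP or eBGP neighbors that peer from a Loopback interface without ebgp-multihop. It is only an illustration. It assumes, as the text does, that loopback peering across a sub-AS boundary needs multihop. It does not understand any other IOS command, and loopback_peers_without_multihop is a hypothetical name.

```python
def loopback_peers_without_multihop(config: str) -> list:
    """Return (neighbor, session type) for cBGP/eBGP peers sourced from a Loopback
    interface that have no ebgp-multihop line. Only this article's commands are parsed."""
    local_as = None
    confed_peers = set()
    remote_as = {}
    from_loopback = set()
    multihop = set()
    for line in config.splitlines():
        words = line.split()
        if words[:2] == ["router", "bgp"]:
            local_as = int(words[2])
        elif words[:3] == ["bgp", "confederation", "peers"]:
            confed_peers.update(int(w) for w in words[3:])
        elif len(words) >= 3 and words[0] == "neighbor":
            addr, option = words[1], words[2]
            value = words[3] if len(words) > 3 else ""
            if option == "remote-as":
                remote_as[addr] = int(value)
            elif option == "update-source" and value.startswith("Loopback"):
                from_loopback.add(addr)
            elif option == "ebgp-multihop":
                multihop.add(addr)
    flagged = []
    for addr, asn in remote_as.items():
        if asn == local_as:
            continue  # iBGP inside the sub-AS: multihop is not needed
        kind = "cBGP" if asn in confed_peers else "eBGP"
        if addr in from_loopback and addr not in multihop:
            flagged.append((addr, kind))
    return flagged


R4_CONFIG = """
router bgp 64990
 bgp confederation identifier 2222
 bgp confederation peers 64991
 neighbor 2.2.2.2 remote-as 64990
 neighbor 2.2.2.2 update-source Loopback0
 neighbor 3.3.3.3 remote-as 64990
 neighbor 3.3.3.3 update-source Loopback0
 neighbor 5.5.5.5 remote-as 64991
 neighbor 5.5.5.5 update-source Loopback0
 neighbor 5.5.5.5 ebgp-multihop 2
"""

print(loopback_peers_without_multihop(R4_CONFIG))  # []
without = R4_CONFIG.replace(" neighbor 5.5.5.5 ebgp-multihop 2\n", "")
print(loopback_peers_without_multihop(without))    # [('5.5.5.5', 'cBGP')]
```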

Now, let's have a look at the AS_PATH attribute inside the confederation. Let's check how the prefix of R1's Loopback (100.100.100.1/24) is seen by R6:

```
R6#show ip bgp
BGP table version is 5, local router ID is 6.6.6.6
Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,
              r RIB-failure, S Stale
Origin codes: i - IGP, e - EGP, ? - incomplete

   Network          Next Hop            Metric LocPrf Weight Path
*>i100.100.100.0/24 2.2.2.2                  0    100      0 (64990) 1111 i
*> 166.166.166.0/24 0.0.0.0                  0         32768 i
R6#
R6#show ip bgp 100.100.100.0/24
BGP routing table entry for 100.100.100.0/24, version 5
Paths: (1 available, best #1, table default)
  Not advertised to any peer
  (64990) 1111
    2.2.2.2 (metric 31) from 5.5.5.5 (5.5.5.5)
      Origin IGP, metric 0, localpref 100, valid, confed-internal, best
```

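As you can see, the AS_PATH is now (64990) 1111. Inside the confederation, the AS_PATH grows following the eBGP rule: every sub-AS the update passes through is added to the AS_PATH and shown between parentheses. Internally, these sub-AS numbers travel in a separate AS_CONFED_SEQUENCE segment. R4 added 64990 when it advertised the route to R5 over the cBGP session. The iBGP hops (R2 to R4 and R5 to R6) left the AS_PATH untouched. The next hop (2.2.2.2, set by R2's next-hop-self) and the local preference (100) also crossed the cBGP session unchanged.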
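The AS_PATH behavior can be modeled in a few lines. In this Python sketch (an illustrative model, not router code), the path is a list of segments:

- a cBGP hop prepends the sender's sub-AS to a leading AS_CONFED_SEQUENCE segment;
- an iBGP hop leaves the path alone;
- render mimics the parentheses IOS uses.

It reproduces the two paths discussed in this article: (64990) 1111 on R6 and (64991) on R2. AS_CONFED_SET segments are ignored for simplicity, and the function names are hypothetical.

```python
# An AS_PATH as a list of (segment_type, [asns]) tuples; leftmost = most recent.
CONFED_SEQ = "AS_CONFED_SEQUENCE"
SEQ = "AS_SEQUENCE"


def send_cbgp(path, local_sub_as):
    """Prepend the sender's sub-AS, as a router does toward another sub-AS."""
    if path and path[0][0] == CONFED_SEQ:
        return [(CONFED_SEQ, [local_sub_as] + path[0][1])] + path[1:]
    return [(CONFED_SEQ, [local_sub_as])] + path


def send_ibgp(path):
    """iBGP inside a sub-AS leaves the AS_PATH untouched."""
    return path


def render(path):
    """Format the path the way IOS prints it: confed segments in parentheses."""
    parts = []
    for seg_type, asns in path:
        text = " ".join(str(a) for a in asns)
        parts.append(f"({text})" if seg_type == CONFED_SEQ else text)
    return " ".join(parts)


# 100.100.100.0/24: R1 -> R2 (eBGP) -> R4 (iBGP) -> R5 (cBGP) -> R6 (iBGP)
seen_by_r6 = [(SEQ, [1111])]                # as received by R2 from R1
seen_by_r6 = send_ibgp(seen_by_r6)          # R2 -> R4
seen_by_r6 = send_cbgp(seen_by_r6, 64990)   # R4 -> R5
seen_by_r6 = send_ibgp(seen_by_r6)          # R5 -> R6
print(render(seen_by_r6))  # (64990) 1111

# 166.166.166.0/24 originated by R6 with an empty path
seen_by_r2 = []
seen_by_r2 = send_ibgp(seen_by_r2)          # R6 -> R5
seen_by_r2 = send_cbgp(seen_by_r2, 64991)   # R5 -> R4
seen_by_r2 = send_ibgp(seen_by_r2)          # R4 -> R2
print(render(seen_by_r2))  # (64991)
```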

Good. Now let's see how the prefix of R6's Loopback (166.166.166.66/24) is seen by R2:

```
R2#sh ip bgp
BGP table version is 3, local router ID is 2.2.2.2
Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,
              r RIB-failure, S Stale
Origin codes: i - IGP, e - EGP, ? - incomplete

   Network          Next Hop            Metric LocPrf Weight Path
*> 100.100.100.0/24 20.20.12.1               0             0 1111 i
*>i166.166.166.0/24 6.6.6.6                  0    100      0 (64991) i
R2#
R2#sh ip bgp 166.166.166.0/24
BGP routing table entry for 166.166.166.0/24, version 3
Paths: (1 available, best #1, table default)
  Advertised to update-groups:
     8
  (64991)
    6.6.6.6 (metric 31) from 4.4.4.4 (4.4.4.4)
      Origin IGP, metric 0, localpref 100, valid, confed-internal, best
```

And now, as seen by R1:

```
R1#sh ip bgp
BGP table version is 8, local router ID is 1.1.1.1
Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,
              r RIB-failure, S Stale
Origin codes: i - IGP, e - EGP, ? - incomplete

   Network          Next Hop            Metric LocPrf Weight Path
*> 100.100.100.0/24 0.0.0.0                  0         32768 i
*> 166.166.166.0/24 20.20.12.2                             0 2222 i
R1#
R1#sh ip bgp 166.166.166.0/24
BGP routing table entry for 166.166.166.0/24, version 8
Paths: (1 available, best #1, table default)
  Not advertised to any peer
  2222
    20.20.12.2 from 20.20.12.2 (2.2.2.2)
      Origin IGP, localpref 100, valid, external, best
```

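As we can see, R1 sees the route as coming from AS 2222 only. When R2 advertises the prefix over its true eBGP session, it removes the sub-AS numbers from the AS_PATH and prepends the confederation identifier. The whole internal topology of the confederation is therefore hidden from R1.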
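The last step can be sketched the same way. The Python model below is a simplification that ignores AS_CONFED_SET segments. Sending a route to a peer outside the confederation drops the leading confederation segments. It then prepends the confederation identifier exactly like a normal eBGP hop. The send_to_external function is hypothetical.

```python
# An AS_PATH as a list of (segment_type, [asns]) tuples; leftmost = most recent.
CONFED_SEQ = "AS_CONFED_SEQUENCE"
SEQ = "AS_SEQUENCE"


def send_to_external(path, confed_identifier):
    """Drop the leading confed segments, then prepend the identifier like normal eBGP."""
    i = 0
    while i < len(path) and path[i][0] == CONFED_SEQ:
        i += 1  # skip sub-AS numbers: external peers must not see them
    rest = path[i:]
    if rest and rest[0][0] == SEQ:
        return [(SEQ, [confed_identifier] + rest[0][1])] + rest[1:]
    return [(SEQ, [confed_identifier])] + rest


def render(path):
    """Format the path the way IOS prints it: confed segments in parentheses."""
    return " ".join(
        f"({' '.join(map(str, asns))})" if kind == CONFED_SEQ else " ".join(map(str, asns))
        for kind, asns in path
    )


# 166.166.166.0/24 as stored on R2: (64991)
on_r2 = [(CONFED_SEQ, [64991])]
print(render(send_to_external(on_r2, 2222)))  # 2222, as R1 shows
```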